A Concept, Implementation, Benefits and Challenges of Text Spotting: Vehicle License Plate Recognition Case

Ahmad Husaini

∙15 March 2024

Introduction

The Starting Point

In the era of increasing digitization and information overload, the need for efficient and accurate text recognition from images and videos has become increasingly crucial. Text spotting, the automated process of detecting and recognizing text within visual data, plays a crucial role in various applications such as document analysis, image retrieval, augmented reality, and accessibility for the visually impaired.

However, several challenges persist in achieving robust and reliable text spotting in real-world scenarios, particularly in real-world scenarios such as Vehicle License Plate Recognition (VLPR) cases. Variations in text size, fonts, orientations, complex backgrounds, and low-resolution images pose significant hurdles. Moreover, the need for real-time processing and the ability to handle diverse languages and scripts add additional layers of complexity to the text spotting problem. Addressing these challenges is essential to unlock the full potential of text spotting technology in practical applications, especially within the context of Vehicle License Plate Recognition

Statement Problem

To investigate the practicality associated with the implementation of text spotting in Vehicle License Plate Recognition (VLPR) systems, with a focus on understanding the impact on accuracy, real-world benefits, and addressing the challenges.

Objectives

Text spotting in Vehicle License Plate Recognition (VLPR) involves the identification and extraction of text information from license plates on vehicles. It is a crucial component of automatic license plate recognition systems, aiming to accurately detect and interpret the alphanumeric characters on license plates.

Scope and Limitation

The aim of this writing is to emphasize the exploration of technical details related to text spotting for Vehicle License Plate Recognition (VLPR).

Concept of Text Spotting

Text spotting in Vehicle License Plate Recognition (VLPR) involves the identification and extraction of text information from license plates on vehicles. It is a crucial component of automatic license plate recognition systems, aiming to accurately detect and interpret the alphanumeric characters on license plates.

The text spotting process typically involves two main steps:

-

Text Detection: Identifying the regions in an image or video frame where text is present. This step involves locating bounding boxes or outlines around text instances. Various techniques, including traditional computer vision methods and deep learning approaches, can be employed for text detection.

-

Text Recognition (Optical Character Recognition - OCR): Once the text regions are detected, the next step is to recognize the actual content of the text within those regions. OCR algorithms are commonly used for this purpose, and they are designed to convert the visual representation of text into machine-readable text.

Text spotting can be challenging due to variations in text size, orientation, font, background clutter, and other factors. Deep learning techniques, especially convolutional neural networks (CNNs) and recurrent neural networks (RNNs), have shown significant success in addressing these challenges and improving the accuracy of text spotting systems.

Applications of text spotting include reading text from images, extracting information from documents, assisting visually impaired individuals by converting text in the environment into speech, and enhancing content-based image and video retrieval systems.

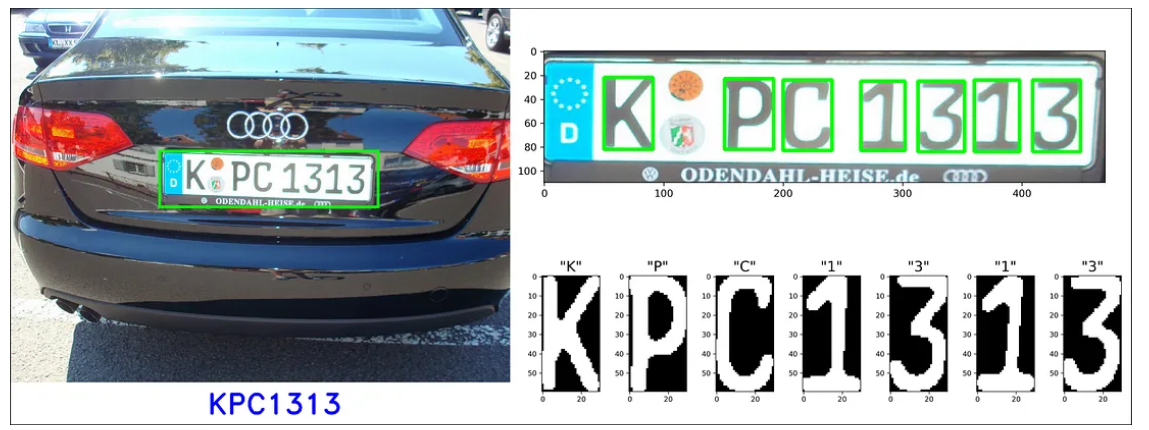

Implementation of Text Spotting in the case of Vehicle License Plate Recognition

- The implementation of text spotting in VLPR typically involves the following steps: Image Acquisition: Capture images or video frames containing vehicles with license plates.

The process begins with capturing images or video frames containing vehicles with license plates. This can be done using cameras installed at various locations, such as traffic intersections, toll booths, or parking lots.

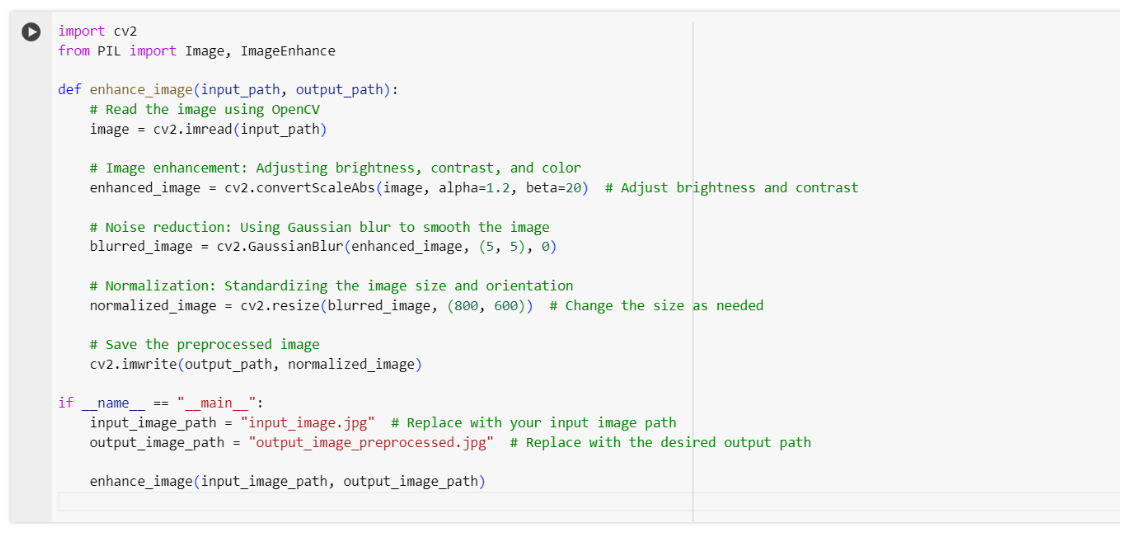

- Preprocessing: Enhance image quality, adjust lighting conditions, and perform noise reduction to improve text visibility.

Before the image is fed into the text spotting system, preprocessing steps are applied to enhance the quality of the image. This may include:

- Image Enhancement: Adjusting brightness, contrast, and color to improve overall visibility

- Noise Reduction: Removing any unwanted artifacts or distortions in the image that could affect the accuracy of text recognition.

- Normalization: Standardizing the image size and orientation to ensure consistency in processing.

Image processing and enhancement can be done using various Python libraries such as OpenCV and Pillow. Here's an example code snippet that demonstrates some basic image preprocessing techniques:

- Localization: Use object detection techniques to locate the region of interest (ROI) where the license plate is present in the image.

Object detection techniques are employed to locate the region of interest (ROI) where the license plate is present in the image. Common methods include:

- Convolutional Neural Networks: Deep learning models trained on large datasets to identify and locate objects within images. Region-based CNNs (R-CNN) or Single Shot Multibox Detector (SSD) are popular choices.

- Bounding Box Regression: Predicting bounding boxes around the license plate region.

- Text Extraction: Employ Optical Character Recognition (OCR) or deep learning models to recognize and extract text from the localized region.

-

Once the license plate region is identified, the next step is to extract the alphanumeric characters. This is typically achieved using Optical Character Recognition (OCR) or deep learning models: OCR Algorithms: Traditional OCR algorithms may be used, especially when the text is well-structured and follows a standard font. These algorithms involve feature extraction, character segmentation, and recognition

-

Deep Learning Models: Recurrent Neural Networks (RNNs), Long Short-Term Memory (LSTM) networks, or Transformer-based models can be employed for end-to-end text recognition, allowing for greater flexibility in handling variations in font, size, and orientation.

- Post-processing: Validate and refine the extracted text to improve accuracy and eliminate false positives

To improve accuracy and eliminate false positives, post-processing techniques are applied:

-

Text Verification: Validating the extracted text against predefined patterns or dictionaries to filter out incorrect interpretations

-

Error Correction: Addressing OCR errors by considering contextual information or leveraging language models to correct misinterpreted characters

- Machine Learning Training:

The success of the text spotting system heavily depends on training models with large and diverse datasets:

-

Dataset Preparation: Curating a dataset with a wide range of license plate images, considering variations in lighting conditions, fonts, and backgrounds.

-

Model Training: Using labeled data to train the machine learning models. This involves adjusting model parameters to minimize the difference between predicted and actual outputs.

- Machine Learning Evaluation:

- Assess the system's performance using evaluation metrics such as precision, recall, and F1 score

- Fine-tune the model based on evaluation results.

Remember to continually update and improve the system as needed, especially as new data becomes available and technology evolves. Plus, this is a simplified example, and for a robust system, you might need to explore and implement more advanced techniques, such as using deep learning models for localization and text extraction, handling variations in font and orientation, and creating a well-curated dataset for training.

Benefits and Challenges

- Benefits

- Enhanced Accuracy: Text spotting technology contributes to improved accuracy in recognizing alphanumeric characters on license plates, leading to more reliable Vehicle License Plate Recognition (VLPR) systems

- Efficient Automation: The automated process of text spotting streamlines the identification and extraction of text information from license plates, reducing the need for manual intervention and enhancing overall operational efficiency.

- Realtime Processing: Implementation of text spotting facilitates real-time processing of images and video frames, enabling swift identification and interpretation of license plate information.

- Challenges

- Variability in Text Characteristics: Challenges arise due to variations in text size, orientation, font, and background clutter on license plates, requiring robust solutions to handle these complexities

- Real-world Scenario Challenges: Implementing text spotting in real-world scenarios, such as diverse lighting conditions, complex backgrounds, and low-resolution images, poses significant hurdles to achieving reliable results.

- Requirements for Real-time Processing: The demand for real-time processing in VLPR cases necessitates efficient algorithms and hardware capabilities, introducing challenges in achieving both speed and accuracy simultaneously

- Training Dataset Diversity: Successful implementation of text spotting systems depends heavily on the availability of large and diverse datasets for training, covering variations in lighting conditions, fonts, and backgrounds of license plate images.

Summary

The exploration of text spotting in Vehicle License Plate Recognition (VLPR) highlights its crucial role in automating the identification and extraction of text information from license plates.

Despite the benefits, challenges persist in achieving robust and reliable results, particularly in real-world scenarios. The variability in text characteristics, challenges posed by diverse scenarios, multilingual complexities, real-time processing requirements, and the necessity for diverse training datasets are crucial aspects that need attention for the successful implementation of text spotting technology in VLPR systems.

Addressing these challenges is essential to unlock the full potential of text spotting and enhance the accuracy and efficiency of VLPR systems in practical applications.

bagikan

ARTIKEL TERKAIT